Human-First AI that gives you control.

When it comes to the security and privacy of your customer data, Gainsight has always been four steps ahead. We’ve applied the same security measures we use to handle our customers’ enterprise data to our generative AI features.

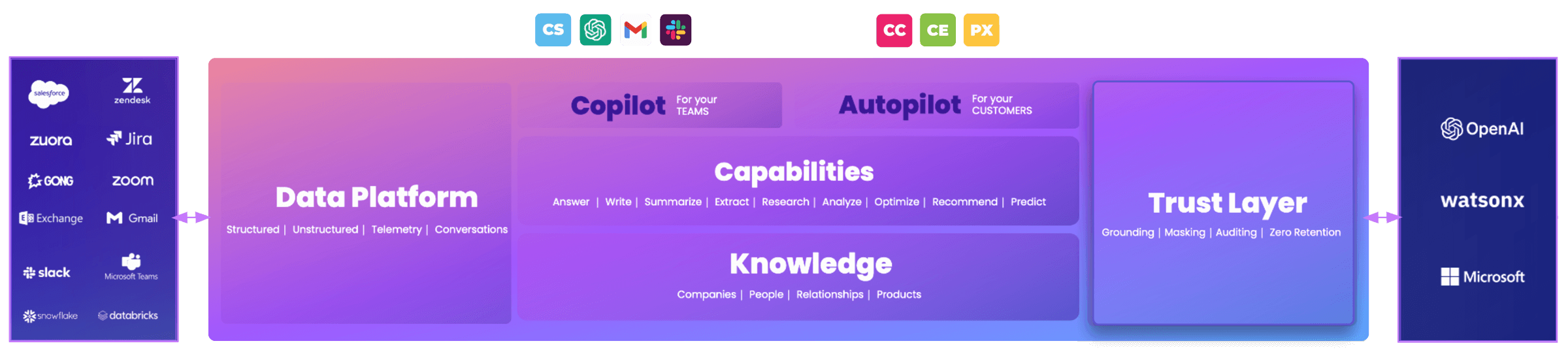

Gainsight products use two types of AI features: those built internally, and those for which we share data with another AI provider. Internally built features are designed to meet the same data security and privacy protocols that our customers already trust Gainsight with for our non-AI features. We hold the AI providers we work with to the same standards, which are outlined below.

AI Data Security and Privacy

Our Trust Layer is a set of robust security measures and protocols that govern the security, reliability, and compliance of Gainsight AI. This foundational layer supports confident and safe use of AI within Gainsight products by addressing these key data protection areas:

- Zero data retention, and no training of LLMs on your data

- Complete privacy, with no cross-tenant data transfer

- Grounding to help ensure the accuracy of outputs

- Auditing, plus completion of a Data Protection Impact Assessment (DPIA) for every AI feature

- Masking of relevant personally identifiable information (PII)

For each AI vendor we work with, we perform a detailed DPIA, send our customers a Notice for Consent, and have our Security Team complete an annual third-party risk assessment.

Gainsight AI Trust Layer

Gainsight AI Ethical Principles

- Bias Mitigation: Gainsight performs human reviews and regular audits of our AI models, and lets clients provide feedback on decisions made by our Generative AI features. We incorporate this feedback into regular updates to the AI models that we use to deliver these features.

- Transparency: Gainsight provides clients with clear documentation (including AI Model Cards) about the models used to deliver our Generative AI features. This documentation covers the data sets being used, how that data is processed, and the availability of human intervention in case of output errors.

- Accountability: Gainsight monitors all Generative AI features in real time and also collects user feedback on their performance. Additionally, Gainsight has an Incident Response Plan in place, which is reviewed and tested at least annually.

- Security: Gainsight’s Generative AI features are afforded the same technical and organizational security measures as other Gainsight service features. Information on these measures can be found here.

- Fairness: To ensure fairness in our Generative AI features, feedback loops let users and impacted groups flag unfair outcomes. Throughout development, we engage diverse internal Gainsight teams (e.g., engineering, AI, and product) for their input and viewpoints, and we regularly monitor and test these features for fairness, documenting the results in reports.

Privacy Review

We currently use three providers for our Gainsight AI features: IBM watsonx, Microsoft Azure (for its GPT APIs), and OpenAI.

- Before onboarding each of these vendors, our Privacy Team performed a thorough Data Protection Impact Assessment (DPIA), and our Security Team conducted a vendor security review as part of third-party risk management.

- Before implementation, we send subprocessor notices to all of our customers, giving them an objection window. If a customer objects to the use of a vendor, we will either use a different subprocessor or disable, from the backend, the features that depend on that vendor. The only way to reactivate these features is for your Privacy/Legal Team to reach out to our Privacy Team.

- We ensure that our vendors are GDPR compliant and that your data is processed in accordance with GDPR. In alignment with GDPR, AI features for EU customers are processed only through Microsoft Azure, and their data is processed only on EU servers.

- All data processed by any of these vendors is subject to a data retention policy, and your data is NOT stored outside of Gainsight. Data retention for abuse monitoring within Microsoft Azure and OpenAI has been turned off for Gainsight.

Security Measures

- Our initial security review covers the feature itself and how the Gainsight environment connects to the vendor’s AI ecosystem.

- Every new feature, whether built internally or sharing data with another provider, must undergo security review and approval before going into production.

- All communication with our AI features is API-based, and our Security Team has performed all security checks as part of our API integration. Only whitelisted APIs can communicate with AI vendors.

- Data is encrypted both at rest and in transit.

- Rigorous security reviews confirm that masking is in place before any data is shared with our vendors.

- Our security measures also include other controls, such as rate limiting, monitoring for throttling, and segmentation.
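The API whitelisting and in-transit encryption described above can be pictured as a gate in front of every outbound vendor call. The following is a minimal Python sketch under stated assumptions, not Gainsight’s implementation: the caller names, the vendor host, and the `may_call_vendor` helper are all hypothetical placeholders.

```python
from urllib.parse import urlparse

# Hypothetical allowlists for illustration only; these names and hosts are
# placeholders, not Gainsight's actual configuration.
ALLOWED_CALLERS = frozenset({"timeline-summary-api", "email-assist-api"})
ALLOWED_VENDOR_HOSTS = frozenset({"ai-vendor.example.com"})


def may_call_vendor(caller: str, vendor_url: str) -> bool:
    """Allow a call only if a whitelisted internal API targets a whitelisted vendor host over HTTPS."""
    parsed = urlparse(vendor_url)
    return (
        caller in ALLOWED_CALLERS
        and parsed.scheme == "https"  # require encryption in transit
        and parsed.hostname in ALLOWED_VENDOR_HOSTS  # exact host match, no suffix tricks
    )
```

Matching the exact hostname (rather than a prefix or substring) means a look-alike host such as `ai-vendor.example.com.evil.net` is rejected.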

Product and Engineering Measures

- All AI features are currently turned off by default. A customer’s administrators must activate each feature before we process any data. This is our commitment to data security and transparency.

- Customer data will NOT be used to train any LLMs, either by Gainsight or by our vendors.

- Robust multi-tenancy systems in Gainsight extend to AI. Your data is never used outside the context of your tenant or for any cross-customer use case. If that were to change in the future, we would require your explicit consent to participate.

- All emails and phone numbers are masked before data is shared with any of our vendors.

- We perform a DPIA for every feature we launch, to ensure that the data is completely secure.
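To illustrate tenant isolation and masking together, here is a minimal Python sketch of preparing text before a vendor call: records are first filtered to the requesting tenant, and email addresses and phone numbers are then replaced with placeholder tokens. The `Record` type, its `tenant_id` field, the regular expressions, and the helper names are hypothetical and not Gainsight’s implementation. Regex matching is a crude stand-in for production PII detection, and the phone pattern can also catch other long digit runs such as dates.

```python
import re
from dataclasses import dataclass

# Hypothetical, deliberately simple patterns. Real email and phone formats vary
# widely, and PHONE_RE can also match other long digit runs (e.g. dates).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


@dataclass(frozen=True)
class Record:
    tenant_id: str  # hypothetical field identifying the owning customer tenant
    text: str


def mask_pii(text: str) -> str:
    """Replace email addresses and phone numbers with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def build_vendor_context(records: list[Record], tenant_id: str) -> list[str]:
    """Keep only the requesting tenant's records, then mask each one before any vendor call."""
    return [mask_pii(r.text) for r in records if r.tenant_id == tenant_id]
```

Filtering by tenant before masking means another tenant’s text never even reaches the masking step, let alone the vendor.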

Frequently Asked Questions

- Can you please explain the exact personal data that will be processed?

All of these features use a subset of the personal data stored in your Gainsight instance. Because Gainsight integrates with your CRM, this data can include the names, job titles, and business contact information (such as physical addresses) of your customers and prospects. It can also include the names and titles of Gainsight users. Email addresses and phone numbers are intentionally excluded from processing: they are masked for privacy and security reasons. Additional data points may include the contents of emails, customer notes, and customer-level data from a client’s CRM.

- How will the data be used?

Our generative AI features use your personal data solely to deliver the specific feature to you. This data is not shared with other clients or other vendors (like OpenAI), and we do not expect it to be used to train any AI language models. If that changes, we will send you an additional notice.

- How do you prevent data leaks from one customer to another when getting an answer from the LLM?

The model behind the Generative AI features runs only on your data, within your instance. No other Gainsight client has access to your data.

- Why are the AI feature toggles grayed out in my instance?

You may not be able to turn a feature on from your instance if your privacy and/or legal team sent in an objection to our subprocessor notice. If you would like access, your privacy and/or legal team will need to rescind the objection by emailing privacy@gainsight.com, referring to the prior objection, and stating specifically that it is being rescinded.

Protection Measures

For Companies of All Sizes & Industries